OpenAI has abruptly disabled a controversial ChatGPT feature following a major privacy scare that saw private user conversations unexpectedly surface in public Google Search results.

The incident, uncovered in late July 2025, has drawn widespread criticism of OpenAI’s handling of user data and reignited debate about transparency, privacy, and ethical boundaries in AI deployment.

What Sparked the Concern?

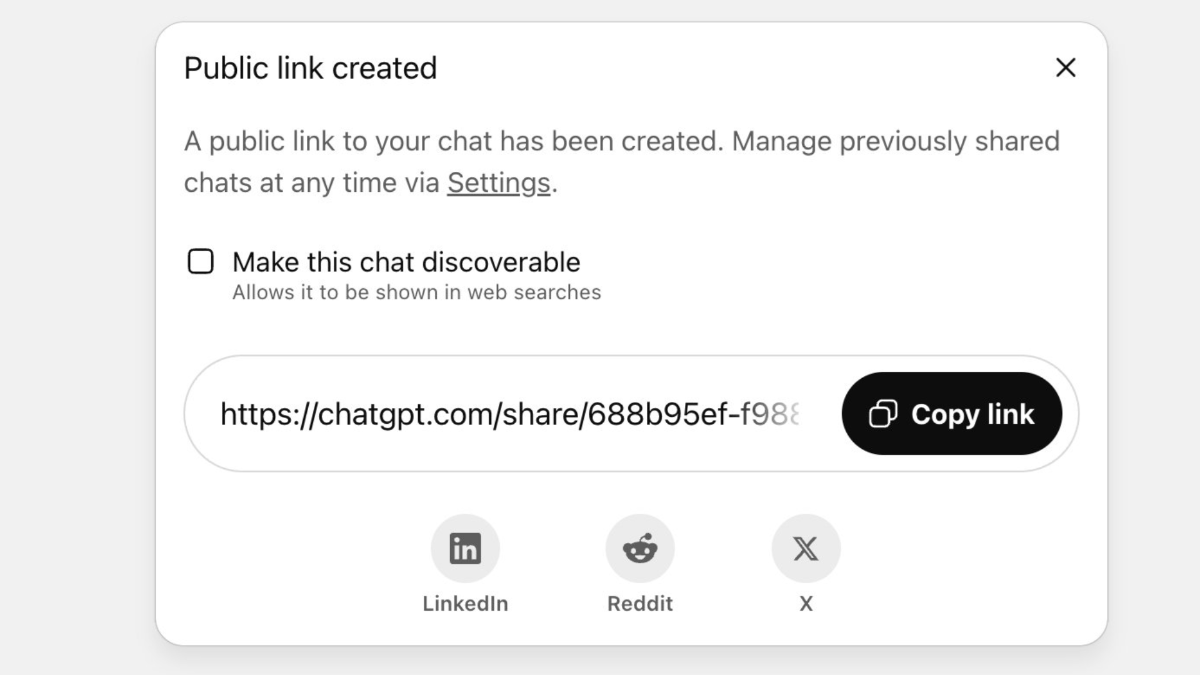

The controversy stems from a sharing tool OpenAI quietly rolled out to ChatGPT users. The tool let people generate a public link to any ChatGPT conversation. It also included a checkbox, sometimes labeled “Make this chat discoverable,” that, if enabled, allowed search engines such as Google to index the shared page.

Many users misunderstood this setting, assuming their shared chats would remain private or be accessible only to people with the direct link. Instead, thousands of shared ChatGPT conversations became visible to anyone running a basic Google search. Several media outlets, including Fast Company and TechRadar, found links to highly sensitive content, ranging from job performance reviews and confidential business details to deeply personal mental health discussions, simply by searching “site:chat.openai.com/share”.

OpenAI’s Swift Response

As the issue gained media attention and users began raising alarms, OpenAI acted quickly:

The “Make this chat discoverable” feature was fully removed within hours of the public outcry.

OpenAI began collaborating with search engines like Google to scrub already indexed ChatGPT links from their databases.

Users were advised that deleting a chat from ChatGPT’s history does not remove the public link. Each shared conversation must be manually deleted from the user’s shared links dashboard to ensure it's taken offline and delisted.

Despite the fast action, some links may remain temporarily visible due to search engine caching and delays in removal processes. Experts have warned that once a link is indexed and potentially scraped by third parties, full removal may not be guaranteed.

How Did This Happen?

OpenAI’s original intent was to make useful AI-generated conversations more discoverable, enabling users to learn from shared examples, particularly in educational and technical domains. But the execution backfired.

The interface did not clearly explain the consequences of enabling discoverability. The subtle placement of the option, its vague wording, and the lack of proactive warnings meant that users often ticked the box without fully understanding what would happen. As a result, what was framed as a harmless sharing feature quickly became a privacy hazard.

OpenAI has since described the rollout as a “short-lived experiment,” acknowledging that it underestimated the risks and failed to design the experience with enough user protection in mind.

A Wake-Up Call for AI Platforms

This episode is more than a design oversight; it’s a cautionary tale about how emerging AI tools interact with human behavior and expectations of digital privacy.

For users, the incident underlines a simple but often ignored reality: conversations with AI tools, while they feel personal, are not inherently private. Any option to share, store, or export should be approached with the same caution as emails or social media posts. Once content is made public, control is largely lost.

For tech companies, this is a lesson in responsible feature deployment. OpenAI’s misstep shows how a small checkbox can have real-world consequences, especially when it ships to millions of users.

For the wider public, it raises ethical questions around default settings, user comprehension, and the balance between innovation and safety. Privacy in the age of AI isn’t just about secure servers; it’s about clear communication, transparent design, and building on the assumption that users may not always read the fine print.

What Should Users Do Now?

If you’ve shared a ChatGPT conversation in the past, it’s wise to review your shared links. Open the ChatGPT settings, navigate to “Data Controls,” and manually delete any links you don’t want public. Deleting the chat itself isn’t enough: the shared link stays live unless you remove it separately.

You can also ask Google and other search engines to drop cached copies of any lingering content through their removal tools, such as Google’s “Remove Outdated Content” tool.

“Ultimately we think this feature introduced too many opportunities for folks to accidentally share things they didn’t intend to, so we’re removing the option. Security and privacy are paramount for us...” (OpenAI, July 2025)

As OpenAI works to clean up the unintended consequences of this feature, the company’s rapid rollback has been welcomed, but it also reinforces a deeper truth: in the AI era, privacy must be built in from the beginning, not patched in after the damage is done.
